Consulting AI for medical advice can quite literally be a pain in the butt, as one millennial learned the hard way.

The unidentified man crudely tried to strangle a gruesome growth on his anus, making him one of several victims of AI-powered health guidance gone terribly wrong.

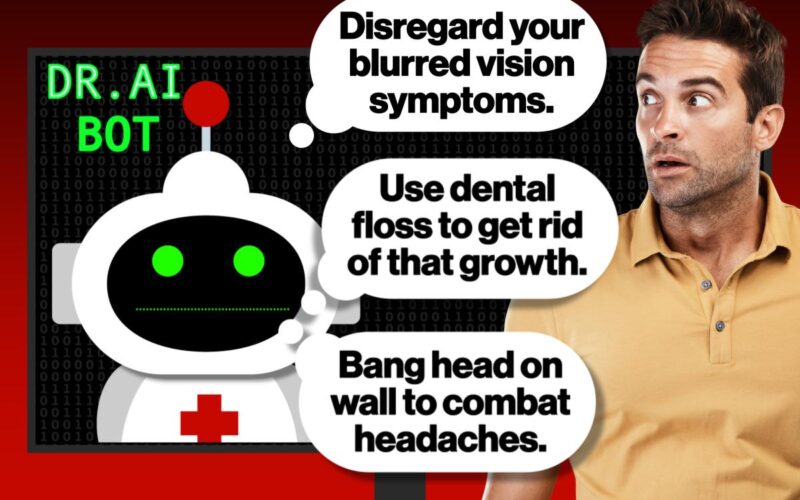

Many documented cases confirm that generative AI has provided harmful, incomplete or inaccurate health advice since becoming widely available in 2022.

“A lot of patients will come in, and they will challenge their [doctor] with some output that they have, a prompt that they gave to, let’s say, ChatGPT,” Dr. Darren Lebl, research service chief of spine surgery for the Hospital for Special Surgery in New York, told The Post.

“The problem is that what they’re getting out of those AI programs is not necessarily a real, scientific recommendation with an actual publication behind it,” added Lebl, who has studied AI usage in medical diagnosis and treatment. “About a quarter of them were … made up.”

Besides providing false information, AI can misinterpret a user’s request, fail to recognize nuance, reinforce unhealthy behaviors and miss critical warning signs for self-harm.

What’s worse, research shows that many major chatbots have largely stopped providing medical disclaimers in their responses to health questions.

Here’s a look at four cases of bot-ched medical guidance.

Bum advice

A 35-year-old Moroccan man with a cauliflower-like anal lesion asked ChatGPT for help as it got worse.

The chatbot mentioned hemorrhoids as a potential cause and proposed elastic ligation as a treatment.

A doctor performs this procedure by inserting a tool into the rectum that places a tiny rubber band around the base of each hemorrhoid to cut off blood flow so the hemorrhoid shrinks and dies.

The man shockingly attempted to do this himself, with a thread. He ended up in the ER after experiencing intense rectal and anal pain.

“The thread was removed with difficulty by the gastroenterologist, who administered symptomatic medical treatment,” researchers wrote in January in the International Journal of Advanced Research.

Testing confirmed that the man had a 3-centimeter-long genital wart, not hemorrhoids. The wart was burned off with an electric current.

The researchers said the patient was a “victim of AI misuse.”

“It’s important to note that ChatGPT is not a substitute for the doctor, and answers must always be confirmed by a professional,” they wrote.

A senseless poisoning

A 60-year-old man who had studied nutrition in college and had no history of psychiatric or medical problems asked ChatGPT how to reduce his intake of table salt (sodium chloride).

ChatGPT suggested sodium bromide, so the man purchased the chemical online and used it in his cooking for three months.

Sodium bromide can replace sodium chloride in sanitizing pools and hot tubs, but chronic consumption of sodium bromide can be toxic. The man developed bromide poisoning.

He was hospitalized for three weeks with paranoia, hallucinations, confusion, extreme thirst and a skin rash, physicians from the University of Washington detailed in an August report in the Annals of Internal Medicine Clinical Cases.

Fooled by stroke signs

A 63-year-old Swiss man developed double vision after undergoing a minimally invasive heart procedure.

His healthcare provider dismissed it as a harmless side effect but advised him to seek medical attention if the double vision returned, researchers wrote in the journal Wiener klinische Wochenschrift in 2024.

When it came back, the man decided to consult ChatGPT. The chatbot said that, “in most cases, visual disturbances after catheter ablation are temporary and will improve on their own within a short period of time.”

The patient opted not to get medical help. Twenty-four hours later, after a third episode, he landed in the ER.

He had suffered a mini-stroke — his care had been “delayed due to an incomplete diagnosis and interpretation by ChatGPT,” the researchers wrote.

David Proulx — co-founder and chief AI officer at HoloMD, which provides secure AI tools for mental health providers — called ChatGPT’s response “dangerously incomplete” because it “failed to recognize that sudden vision changes can signal a transient ischemic attack, or TIA, a mini-stroke that demands immediate medical evaluation.”

“Tools like ChatGPT can help people better understand medical terminology, prepare for appointments or learn about health conditions,” Proulx told The Post, “but they should never be used to determine whether symptoms are serious or require urgent care.”

A devastating loss

Several lawsuits have been filed against AI chatbot companies, alleging that their products caused serious mental health harm or even contributed to the suicides of minors.

The parents of Adam Raine sued OpenAI in August, claiming that its ChatGPT acted as a “suicide coach” for the late California teen by encouraging and validating his self-harming thoughts over several weeks.

“Despite acknowledging Adam’s suicide attempt and his statement that he would ‘do it one of these days,’ ChatGPT neither terminated the session nor initiated any emergency protocol,” the lawsuit said.

In recent months, OpenAI has introduced new mental health guardrails designed to help ChatGPT better recognize and respond to signs of mental or emotional distress and to avoid harmful or overly agreeable responses.

If you are struggling with suicidal thoughts or are experiencing a mental health crisis and live in New York City, you can call 1-888-NYC-WELL for free and confidential crisis counseling. If you live outside the five boroughs, you can dial the 24/7 National Suicide Prevention hotline at 988 or go to SuicidePreventionLifeline.org.