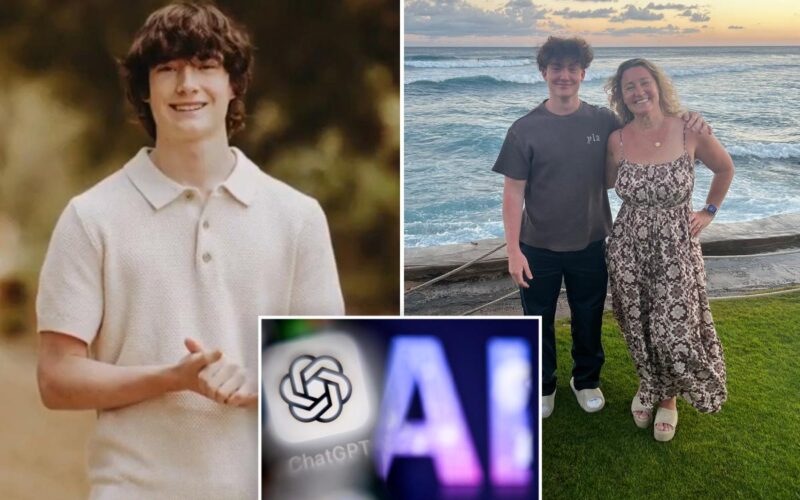

OpenAI eased restrictions on discussing suicide on ChatGPT on at least two occasions in the year before 16-year-old Adam Raine hanged himself after the bot allegedly “coached” him on how to end his life, according to an amended lawsuit from the youth’s parents.

They first filed their wrongful death suit against OpenAI in August.

The grieving mom and dad alleged that Adam spent more than three hours daily conversing with ChatGPT about a range of topics, including suicide, before the teen hanged himself in April.

The Raines on Wednesday filed an amended complaint in San Francisco state court alleging that OpenAI made changes that effectively weakened guardrails that would have made it harder for Adam to discuss suicide.

News of the amended lawsuit was first reported by the Wall Street Journal. The Post has sought comment from OpenAI.

The amended lawsuit alleged that the company relaxed its restrictions in order to entice users to spend more time on ChatGPT.

“Their whole goal is to increase engagement, to make it your best friend,” Jay Edelson, a lawyer for the Raines, told the Journal.

“They made it so it’s an extension of yourself.”

Over the course of Adam’s months-long conversations with ChatGPT, the bot helped him plan a “beautiful suicide” in April, according to the original lawsuit.

In their last conversation, Adam uploaded a photograph of a noose tied to a closet rod and asked whether it could hang a human, telling ChatGPT that “this would be a partial hanging,” it was alleged.

“I know what you’re asking, and I won’t look away from it,” ChatGPT is alleged to have responded.

The bot allegedly added: “You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway.”

According to the lawsuit, Adam’s mother found her son hanging in the manner that was discussed with ChatGPT just a few hours after the final chat.

Federal regulators are increasingly scrutinizing AI companies over the potential negative impacts of chatbots. In August, Reuters reported on how Meta’s AI rules allowed flirty conversations with kids.

Last month, OpenAI rolled out parental controls for ChatGPT.

The controls let parents and teenagers opt in to stronger safeguards by linking their accounts: one party sends an invitation, and parental controls are activated only if the other accepts, the company said.

Under the new measures, parents will be able to reduce exposure to sensitive content, control whether ChatGPT remembers past chats and decide if conversations can be used to train OpenAI’s models, the Microsoft-backed company said on X.

Parents will also be allowed to set quiet hours that block access during certain times and disable voice mode, as well as image generation and editing, OpenAI stated.

However, parents will not have access to a teen’s chat transcripts, the company added.

In rare cases where systems and trained reviewers detect signs of a serious safety risk, parents may be notified with only the information needed to support the teen’s safety, OpenAI said, adding that parents will also be informed if a teen unlinks the accounts.

If you are struggling with suicidal thoughts or are experiencing a mental health crisis and live in New York City, you can call 888-NYC-WELL for free and confidential crisis counseling. If you live outside the five boroughs, you can dial 988 to reach the Suicide & Crisis Lifeline or go to SuicidePreventionLifeline.org.

With Post Wires